In comparison to ViceVersa, robocopy goes through the directory structure in a linear fashion, so it has no major memory requirements. ViceVersa would first scan the whole source and destination and then perform the comparison. As the source and destination grew larger and larger, more memory was required for that initial comparison, until at a certain point it would just give up.

I ended up with the following command for mirroring the directories using robocopy: `robocopy \\SourceServer\Share \\DestinationServer\Share /MIR /FFT /Z /XA:H /W:5`

/MIR specifies that robocopy should mirror the source directory to the destination directory. Beware that this may delete files at the destination. /FFT uses FAT file timing instead of NTFS, which means timestamps are compared with a coarser, two-second granularity. For operations across network shares this seems to be much more reliable; just don't rely on the file timings being precise to the second. /Z ensures robocopy can resume the transfer of a large file mid-file instead of restarting it. /XA:H makes robocopy ignore hidden files; usually these are system files that we're not interested in. /W:5 shortens the wait between retries from the default 30 seconds to 5.
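If the weekly job is started from a script rather than typed by hand, the command can be wrapped so that its result is checked. The following is a minimal sketch, assuming Python is available on the machine running the job. The function names (`build_mirror_command`, `describe_exit_code`, `run_mirror`) are made up for this illustration. It relies on robocopy's exit code being a bitmask in which values below 8 mean that no copy failed.

```python
import subprocess


def build_mirror_command(source, destination, wait_seconds=5):
    """Return the robocopy argument list used in this post (hypothetical helper)."""
    return [
        "robocopy", source, destination,
        "/MIR",                # mirror: copy new/changed files, delete extras
        "/FFT",                # compare timestamps with FAT (two-second) granularity
        "/Z",                  # restartable mode for large files
        "/XA:H",               # skip files that have the hidden attribute
        f"/W:{wait_seconds}",  # seconds to wait between retries
    ]


def describe_exit_code(code):
    """Translate robocopy's bitmask exit code into readable text."""
    flags = {
        1: "files copied",
        2: "extra files or directories detected",
        4: "mismatched files or directories detected",
        8: "some copies failed",
        16: "fatal error",
    }
    parts = [text for bit, text in flags.items() if code & bit]
    return ", ".join(parts) if parts else "nothing to do"


def run_mirror(source, destination):
    """Run robocopy (Windows only) and return (succeeded, description)."""
    # No check=True here: a non-zero exit code is normal for robocopy.
    result = subprocess.run(build_mirror_command(source, destination))
    return result.returncode < 8, describe_exit_code(result.returncode)
```

Calling `run_mirror(r"\\SourceServer\Share", r"\\DestinationServer\Share")` would run the same command as above; treating any exit code of 8 or more as a failure is the part worth keeping even if the rest is replaced by a plain batch file.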

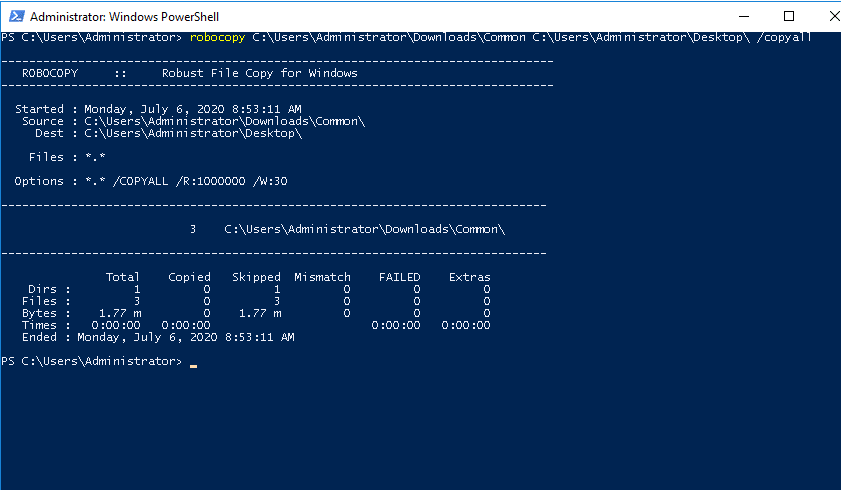

Robocopy (Robust File Copy) does one job extremely well: it copies files. It's run from the command line, though a wrapper GUI has been made for it. The basic syntax for calling robocopy is: `robocopy <source> <destination> [<file>[ ...]] [<options>]`. You give it a source and a destination address and it'll make sure all files & directories from the source are copied to the destination (subdirectories are included once you pass /S, /E or /MIR). If an error occurs it'll wait for 30 seconds (configurable) before retrying, and it'll keep doing this a million times (configurable). That means robocopy will survive a network error and just resume the copying process once the network is back up again.

What makes robocopy really shine is not its ability to copy files, but its ability to mirror one directory into another. That means it'll not just copy files, it'll also delete any extra files in the destination directory.
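To make the mirroring idea concrete, here is a small Python sketch of what "mirror" means: anything new or changed in the source is copied, and anything in the destination that the source doesn't have is deleted. This is only an illustration of the semantics, not how robocopy is actually implemented. The names `plan_mirror` and `_differs` and the two-second `time_tolerance` default are assumptions made for the example. It only plans the actions and never touches any files.

```python
import os


def _differs(src_path, dst_path, tolerance):
    """Treat files as different if size or (roughly) modification time differ."""
    s, d = os.stat(src_path), os.stat(dst_path)
    return s.st_size != d.st_size or abs(s.st_mtime - d.st_mtime) > tolerance


def plan_mirror(source, destination, time_tolerance=2.0):
    """Yield ("copy" | "delete", relative_path) actions, one directory at a time."""
    for src_dir, subdirs, files in os.walk(source):
        rel = os.path.relpath(src_dir, source)
        dst_dir = os.path.normpath(os.path.join(destination, rel))
        # Only the listing of the current directory is held in memory.
        dst_entries = set(os.listdir(dst_dir)) if os.path.isdir(dst_dir) else set()

        # New or changed files in the source get copied.
        for name in sorted(files):
            src_path = os.path.join(src_dir, name)
            dst_path = os.path.join(dst_dir, name)
            if name not in dst_entries or _differs(src_path, dst_path, time_tolerance):
                yield "copy", os.path.normpath(os.path.join(rel, name))

        # Anything in the destination that the source lacks gets deleted.
        for name in sorted(dst_entries - set(files) - set(subdirs)):
            yield "delete", os.path.normpath(os.path.join(rel, name))
```

Because the function is a generator that compares one directory at a time, it never needs a full list of both trees in memory, which is the property that mattered for my share with its very large number of files.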


Apparently ViceVersa was simply unable to handle more than a million files (give or take a bit). Their forums are filled with people asking for solutions, though the only suggestion on offer is to split the backup job into several smaller jobs. Hence I started taking backups of MyShare/A, MyShare/B, etc. This quickly grew out of hand as the number of files increased.

Some time later I was introduced to Robocopy. Robocopy ships with Vista, Win7 and Server 2008. For Server 2003 and XP it can be downloaded as part of the Windows Server 2003 Resource Kit Tools.

ViceVersa worked great for some time, although it wasn't the speediest solution given my scenario. Some time later it stopped working.


Recently I needed to implement a very KISS backup solution that simply synchronized two directories once a week for offsite storage of the backup data. While seemingly simple, the first hitch was that there would be several thousand files in binary format, so compression was out of the question. All changes would be additions and deletions, that is, no incremental updates. Finally, I'd only have access to the files through a share, so it wouldn't be possible to deploy a local backup solution that monitored for changes and only sent diffs. I started out using ViceVersa PRO with the VVEngine addon for scheduling.


On numerous occasions I've had a need for synchronizing directories of files & subdirectories. I've done it to synchronize work files from my desktop PC to my laptop in the pre-always-on era (today I use SVN for almost all files that need to be in sync).
